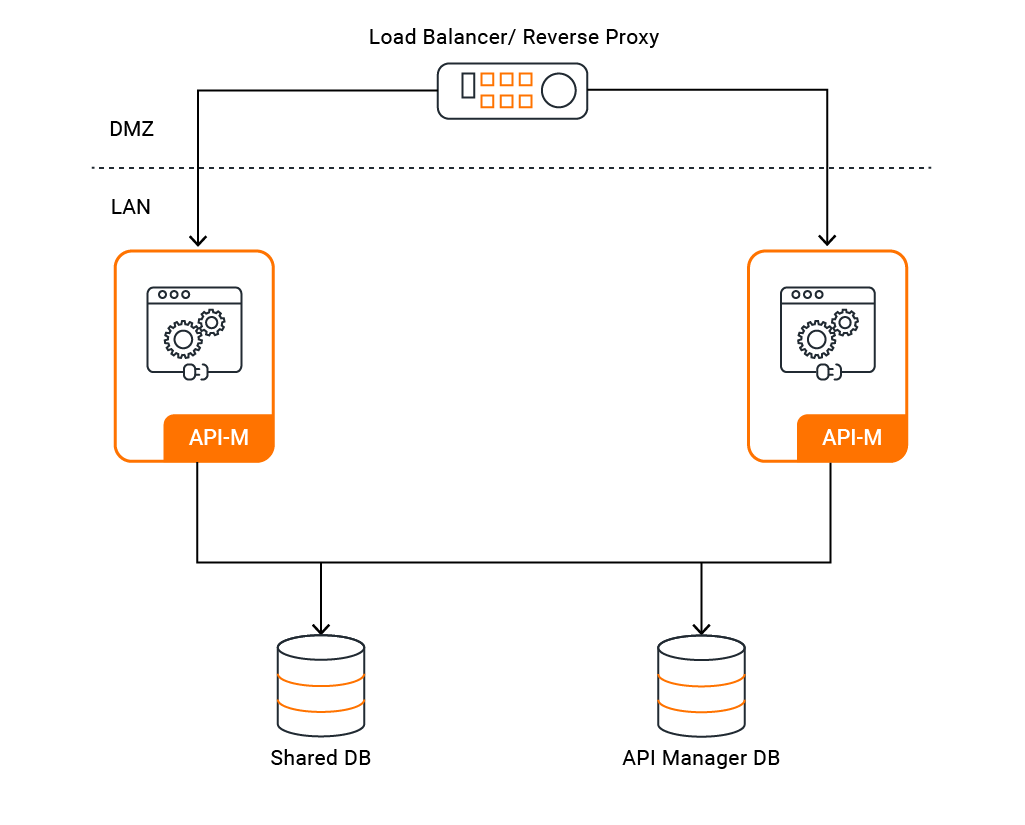

Configuring an Active-Active Deployment¶

This page walks you through manually configuring MWARE ESB with two active nodes, each of which runs all the API-M components together in one instance (an all-in-one instance).

Follow the instructions below to configure and deploy API-M by using an Active-Active deployment:

Step 1 - Create an SSL Certificate¶

Info

All MWARE products are shipped by default with a keystore file and a truststore file stored in the <PRODUCT_HOME>/repository/resources/security/ directory. The default keystore shipped with a MWARE product, wso2carbon.jks, is configured with a private key and a self-signed public key pair for all purposes, such as encrypting sensitive information and communicating over SSL.

In a production setup, it is advised to set up several different keystores with separate trust chains for different use cases. For more information, see Recommendations for setting up keystores in MWARE products.

To create an all-purpose keystore or multiple keystores for authentication and data protection, follow the steps in Creating New Keystores.

Tip

You should use the same keystore and truststore for SSL in both MWARE ESB instances.
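As a rough illustration of this step, the sketch below uses the JDK `keytool` utility to generate a self-signed key pair and import its certificate into a truststore. It does not replace the Creating New Keystores guide. The function name `create_keystore`, the alias `apim`, the output file names, the one-year validity, and the password are all hypothetical placeholders, and a production setup would normally use a CA-signed certificate instead.

```shell
#!/bin/sh
# Sketch only: create a self-signed keystore and a matching truststore with
# the JDK keytool. Alias, file names, and validity are illustrative assumptions.

create_keystore() {
  dir="$1"    # existing output directory
  host="$2"   # hostname placed in the certificate CN, e.g. api.am.wso2.com
  pass="$3"   # keystore/truststore password (placeholder, at least 6 characters)

  # Generate a 2048-bit RSA key pair with a self-signed certificate.
  keytool -genkeypair -alias apim -keyalg RSA -keysize 2048 -validity 365 \
    -dname "CN=${host}" -storetype JKS \
    -keystore "${dir}/apim-keystore.jks" \
    -storepass "${pass}" -keypass "${pass}" || return 1

  # Export the public certificate from the keystore.
  keytool -exportcert -alias apim \
    -keystore "${dir}/apim-keystore.jks" -storepass "${pass}" \
    -file "${dir}/apim.crt" || return 1

  # Import the certificate into a truststore so that peers can trust it.
  keytool -importcert -noprompt -alias apim -file "${dir}/apim.crt" \
    -storetype JKS -keystore "${dir}/apim-truststore.jks" \
    -storepass "${pass}" || return 1
}
```

For example, `create_keystore /tmp/certs api.am.wso2.com 'changeit'` would write both files to an existing `/tmp/certs` directory. The same pair of files would then be copied to both nodes, in line with the tip above.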

Step 2 - Configure the Load Balancer¶

In order to access the MWARE ESB Portals and Gateway, you need to front the system with a load balancer. You can use any load balancer that is available to your system.

Follow the steps in Configuring the Proxy Server and the Load Balancer to configure the load balancer/reverse proxy that fronts the API-M nodes in the demilitarized zone (DMZ).

Tip

For example, if the hostname api.am.wso2.com is used to access all portals (publisher, store, admin, and carbon) and gw.am.wso2.com is used to invoke APIs, the deployment.toml file in the <API-M_HOME>/repository/conf directory of both nodes should have the following reverse proxy configurations.

NOTE: The following is a sample configuration, so your parameter values might be different.

[server]
hostname = "api.am.wso2.com"

[transport.https.properties]
proxyPort = 443

Step 3 - Configure the Databases¶

The WSO2AM_DB and WSO2SHARED_DB databases need to be shared between the two API-M nodes. It is recommended to use an industry-standard RDBMS for this purpose. For more information on the default databases and changing them to RDBMS databases, see Working with Databases.

Tip

If you have configured the apim and shared databases correctly, the deployment.toml file in the <API-M_HOME>/repository/conf directory should have the following configurations.

NOTE: The following is a sample configuration for MySQL 8.0, so your parameter values might be different.

[database.apim_db]
type = "mysql"
url = "jdbc:mysql://mysql.wso2.com:3306/WSO2AM_DB?useSSL=false"
username = "wso2carbon"
password = "wso2carbon"
driver = "com.mysql.cj.jdbc.Driver"

[database.shared_db]
type = "mysql"
url = "jdbc:mysql://mysql.wso2.com:3306/WSO2SHARED_DB?useSSL=false"
username = "wso2carbon"
password = "wso2carbon"
driver = "com.mysql.cj.jdbc.Driver"

Step 4 - Configure the Second MWARE ESB Node¶

Make a copy of the active instance configured above and use this copy as the second active instance.

Info

When you copy the node, you also need to copy the SSL certificate that you created for node 1 in Step 1.
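The copy step can be sketched as a small shell function, assuming both directories are reachable from the same machine (for a remote second node, a tool such as `scp` or `rsync` would take the place of `cp`). The function name `clone_node` is hypothetical. The sketch also compares the security directory of the two copies, because that is where the keystore and truststore files are stored.

```shell
#!/bin/sh
# Sketch only: copy node 1's installation to create node 2, then confirm that
# the security directory (keystores and truststores) is identical in both.

clone_node() {
  src="$1"   # configured node 1 directory (<API-M_HOME> of node 1)
  dst="$2"   # target directory for node 2 (must not exist yet)

  [ -d "$src" ] || { echo "source not found: $src" >&2; return 1; }
  [ ! -e "$dst" ] || { echo "target already exists: $dst" >&2; return 1; }

  # -R copies recursively, -p preserves permissions and timestamps.
  cp -Rp "$src" "$dst" || return 1

  # diff -r exits non-zero if any file in the security directory differs.
  diff -r "$src/repository/resources/security" \
          "$dst/repository/resources/security" >/dev/null
}
```

A call such as `clone_node /opt/apim-node1 /opt/apim-node2` (both paths are placeholders) returns a non-zero status if the copy fails or if the keystores differ afterwards.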

Step 5 - Configure the Artifact Synchronization¶

To synchronize the runtime artifacts of the two all-in-one MWARE ESB instances, it is recommended to use a shared file system as the content synchronization mechanism. You can use a common shared file system such as Network File System (NFS) or any other shared file system that is available.

You need to mount the following folders of the two nodes to the shared file system, in order to share the resources between all the nodes.

- <API-M_HOME>/repository/deployment/server/userstores - If a secondary user store has been configured in the super tenant, this folder needs to be backed up.

- <API-M_HOME>/repository/tenants - If tenancy is used and any secondary userstores are configured for the tenants.

NFS configuration

For more information on setting up NFS on Ubuntu, see Network File System (NFS). Note that these configurations may change depending on the OS.
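As a hedged illustration of the mounting step, the function below prints (but does not run) one `mount -t nfs` command for each of the two folders listed above. The function name `print_nfs_mounts`, the NFS server name, and the layout of the export path are assumptions made for this sketch. Real mounts also require root privileges and an NFS client installed on each node.

```shell
#!/bin/sh
# Sketch only: print the NFS mount commands for the two shared folders.
# The export layout on the NFS server is an assumption, not a product default.

print_nfs_mounts() {
  nfs_export="$1"   # e.g. nfs.example.com:/exports/apim (hypothetical)
  apim_home="$2"    # local <API-M_HOME> on this node

  for dir in repository/deployment/server/userstores repository/tenants; do
    # Each folder is mounted from the same relative path under the export.
    echo "mount -t nfs ${nfs_export}/${dir} ${apim_home}/${dir}"
  done
}
```

Running `print_nfs_mounts "nfs.example.com:/exports/apim" "/opt/apim"` on each node lets you review the commands before you execute them or translate them into `/etc/fstab` entries.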

Step 6 - Configure Publisher with the Gateway¶

When the underlying file system is shared, the artifacts are available to both Gateway nodes. Each node can therefore publish the API artifacts to its own Gateway, so you can point the service_url to localhost in the deployment.toml of both nodes.

[[apim.gateway.environment]]
...
service_url = "https://localhost:${mgt.transport.https.port}/services/"
...

Step 7 - Configure Gateway URLs to Expose APIs¶

You need to configure the environment URLs which are used to expose the deployed APIs in the Gateways of both nodes.

Add the Gateway hostname when you configure environments in the <API-M_HOME>/repository/conf/deployment.toml file in both API-M nodes.

Update the endpoints with your chosen hostname for Gateways as shown below.

In this case, let's use gw.am.wso2.com as the hostname.

[[apim.gateway.environment]]
...
ws_endpoint = "ws://gw.am.wso2.com:9099"
wss_endpoint = "wss://gw.am.wso2.com:8099"
http_endpoint = "http://gw.am.wso2.com:${http.nio.port}"
https_endpoint = "https://gw.am.wso2.com:${https.nio.port}"

Step 8 - Configure Rate Limiting¶

- Configure the data publisher in the apim.throttling.url_group block of the <API-M_HOME>/repository/conf/deployment.toml file on both nodes.

You need to update these configurations so that the Gateway can publish data to the Traffic Manager in its own node and to the Traffic Manager in the other node, so that the same event is sent to both servers at the same time.

The ESB Complex Event Processor (ESB CEP) component that lies within the Traffic Manager acts as the data receiver and processes the data to come up with throttling decisions.

Node1

[apim.throttling]
event_duplicate_url = ["tcp://<node2-hostname>:<node2-port>"]

[[apim.throttling.url_group]]
traffic_manager_urls = ["tcp://<node1-hostname>:<node1-port>"]
traffic_manager_auth_urls = ["ssl://<node1-hostname>:<node1-port>"]
type = "loadbalance"

[[apim.throttling.url_group]]
traffic_manager_urls = ["tcp://<node2-hostname>:<node2-port>"]
traffic_manager_auth_urls = ["ssl://<node2-hostname>:<node2-port>"]
type = "loadbalance"

Node1 (sample values)

[apim.throttling]
event_duplicate_url = ["tcp://127.0.0.1:5673"]

[[apim.throttling.url_group]]
traffic_manager_urls = ["tcp://127.0.0.1:9611"]
traffic_manager_auth_urls = ["ssl://127.0.0.1:9711"]
type = "loadbalance"

[[apim.throttling.url_group]]
traffic_manager_urls = ["tcp://127.0.0.1:9612"]
traffic_manager_auth_urls = ["ssl://127.0.0.1:9712"]
type = "loadbalance"

Node2

[apim.throttling]
event_duplicate_url = ["tcp://<node1-hostname>:<node1-port>"]

[[apim.throttling.url_group]]
traffic_manager_urls = ["tcp://<node1-hostname>:<node1-port>"]
traffic_manager_auth_urls = ["ssl://<node1-hostname>:<node1-port>"]
type = "loadbalance"

[[apim.throttling.url_group]]
traffic_manager_urls = ["tcp://<node2-hostname>:<node2-port>"]
traffic_manager_auth_urls = ["ssl://<node2-hostname>:<node2-port>"]
type = "loadbalance"

Node2 (sample values)

[apim.throttling]
event_duplicate_url = ["tcp://127.0.0.1:5672"]

[[apim.throttling.url_group]]
traffic_manager_urls = ["tcp://127.0.0.1:9611"]
traffic_manager_auth_urls = ["ssl://127.0.0.1:9711"]
type = "loadbalance"

[[apim.throttling.url_group]]
traffic_manager_urls = ["tcp://127.0.0.1:9612"]
traffic_manager_auth_urls = ["ssl://127.0.0.1:9712"]
type = "loadbalance"

- Save your changes.

Note

- Configure Rate Limiting for the API Gateway Cluster so that the request counters will be replicated across the API Gateway cluster when working with multiple API Gateway nodes.

- Configuring Rate Limiting for the API Gateway cluster is not applicable if you have a clustered setup.

Step 9 - Optionally, enable distributed cache invalidation¶

Add the following configuration block to the <API-M_HOME>/repository/conf/deployment.toml file of both nodes.

[apim.cache_invalidation]
enabled = true

Step 10 - Configure API-M Analytics¶

ESB Analytics is delivered via the ESB Analytics cloud solution. You need to configure the ESB Gateway to publish analytics data into the cloud.

See the instructions on configuring the API Gateway with the cloud-based analytics solution.

Step 11 - Configure Production Hardening¶

In a production setup, ensure that you have taken into account the respective security hardening factors (e.g., changing and encrypting the default passwords, configuring JVM security etc.) and other production deployment guidelines (e.g., tuning parameters, backup and recovery recommendations etc.) before deploying MWARE ESB nodes.

For more information on security hardening guidelines, see Security Guidelines for Production Deployment.

For more information on other production deployment guidelines, see Production Deployment Guidelines.

Step 12 - Start the MWARE ESB Servers¶

Start the MWARE ESB servers using the standard start-up script. For more information, see Starting the server.

Linux/macOS

cd <API-M_HOME>/bin/
sh api-manager.sh

Windows

cd <API-M_HOME>\bin\
api-manager.bat --run

Info

If you want to deploy MWARE ESB using a hybrid active-active deployment pattern, where MWARE IAM is used as the Key Manager in high availability mode while the rest of the MWARE ESB components are all in one node, configure and start the Key Manager (e.g., configure and start MWARE IAM as the Key Manager) before starting the API-M servers.